
Voice activated business processes

From the early 1950s, when a system was created to understand digits spoken by one person’s voice, to today’s technical wonders such as Apple’s Siri, Google Home and Amazon’s Alexa, voice recognition software has been around longer than we think. That said, it has evolved and expanded into what we see today in a relatively short time.

With today’s commercial market seemingly targeted only at household use, is there any potential for using this technology in business? The short answer is: of course there is! Who doesn’t want to be able to blurt a request out loud and have their very own AI assistant carry out their demands? “Machine! Run my business successfully!” …Thankfully, I don’t think we are quite there yet.

If only it were so easy. Well, it is most definitely possible… A quick look into api.ai by Speaktoit reveals just how it could be done. In this example I’ll show you a basic leave request prototype.

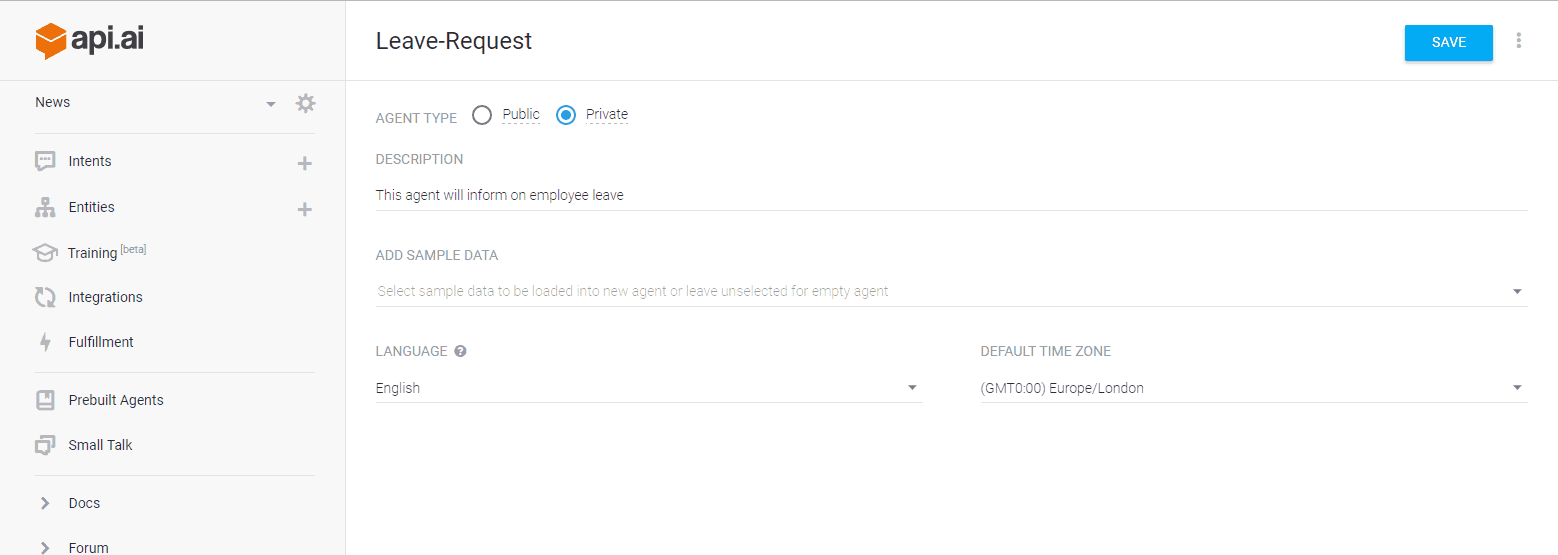

Firstly, we can go to console.api.ai and create a new Agent called “Leave-Request”. This is basically the project file and is a way of differentiating this behaviour from other Agents:

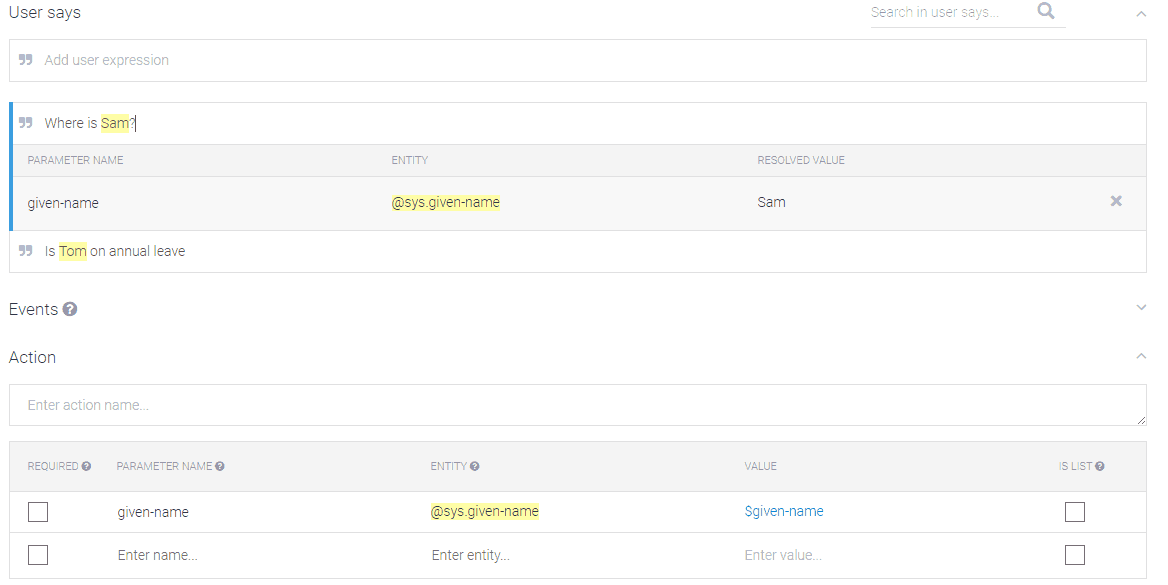

Next, we’ll create an “Intent”. An “Intent” covers the possible questions a user might ask and the responses to them. In this instance we only care about employee leave, so a few possible user expressions have been added.

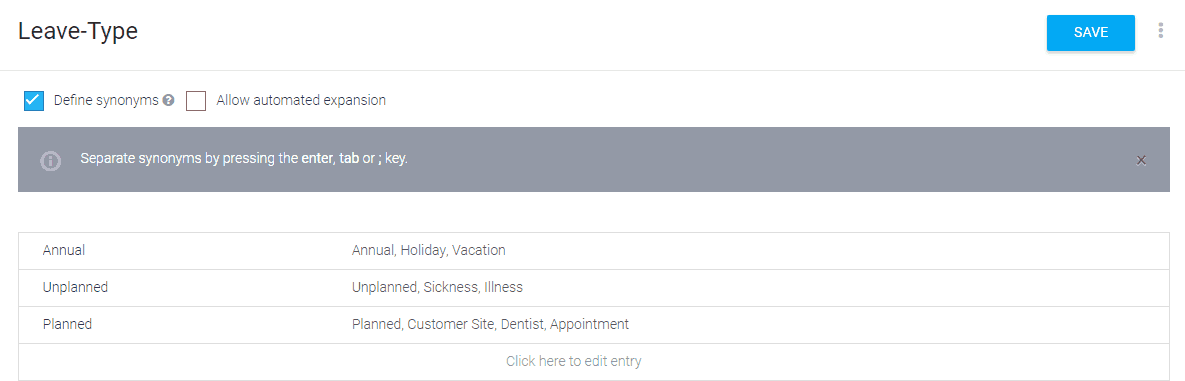

As you can see here, the names of the employees have been highlighted. api.ai has correctly recognised them as names by itself and assigned them an “Entity”. An entity is basically an enumeration of a type of object. We can also make our own entities if we want to, so let’s make one for types of leave:

So we’ve added types of leave and possible synonyms for each type. When the user says any of these words, it can be interpreted as the matching leave type and the correct result can be returned.

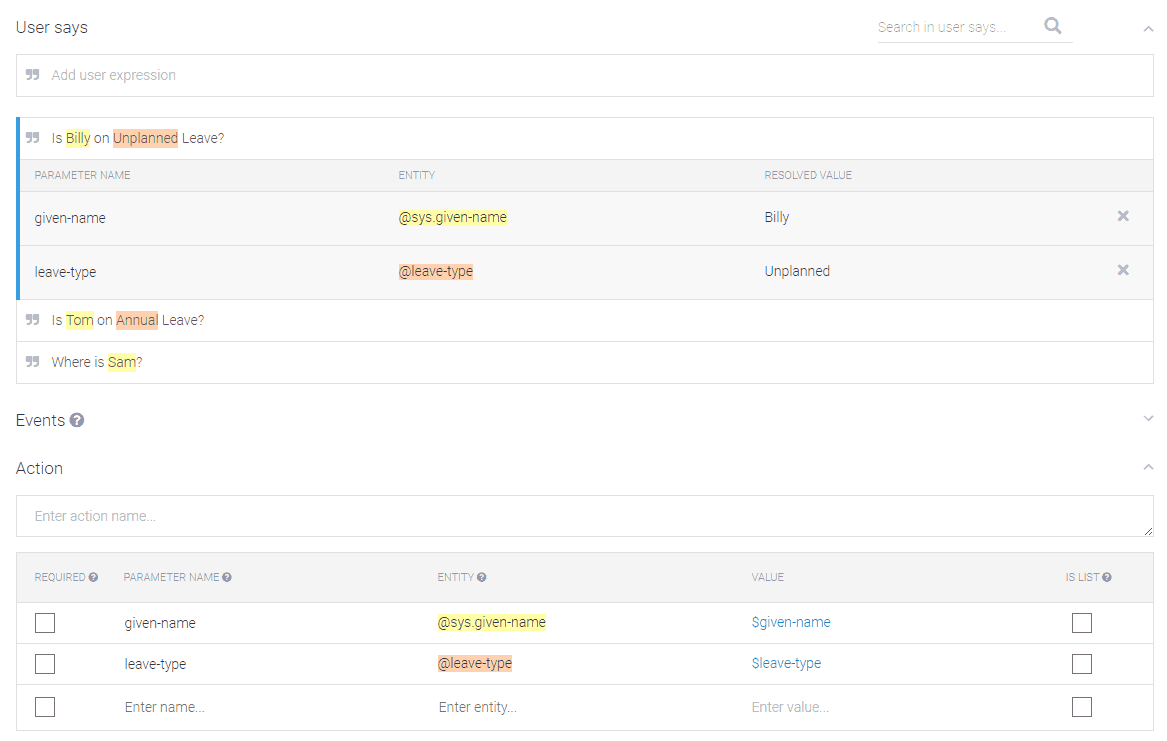
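To make the idea of an entity with synonyms a bit more concrete, here is a minimal Python sketch of the same lookup done by hand. This is not how api.ai works internally, just an illustration of the mapping. Only “Unplanned” and the entity name “leave-type” come from the prototype above; the “Planned” value, the synonym lists and the `resolve_leave_type` function are assumptions made up for the example.

```python
import re

# Hypothetical "leave-type" entity: canonical value -> synonyms.
# Only "Unplanned" appears in the prototype; the rest is assumed.
LEAVE_TYPE_ENTITY = {
    "Planned": ["planned", "holiday", "annual leave", "vacation"],
    "Unplanned": ["unplanned", "sick", "sick leave", "ill"],
}


def resolve_leave_type(phrase):
    """Return the canonical leave type mentioned in the phrase, or None."""
    text = phrase.lower()
    candidates = [
        (synonym, canonical)
        for canonical, synonyms in LEAVE_TYPE_ENTITY.items()
        for synonym in synonyms
    ]
    # Try longer synonyms first so "sick leave" is preferred over "sick".
    for synonym, canonical in sorted(
        candidates, key=lambda pair: len(pair[0]), reverse=True
    ):
        # Word boundaries stop "planned" from matching inside "unplanned".
        if re.search(r"\b" + re.escape(synonym) + r"\b", text):
            return canonical
    return None


print(resolve_leave_type("Is John on unplanned leave?"))
print(resolve_leave_type("Is Sarah on holiday today?"))
```

The point of the entity is exactly this: whichever synonym the user happens to say, the intent only ever sees one canonical value to act on.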

Back in the intent, we can see that when we create another user query, it has correctly matched “Unplanned” to our “leave-type” entity:

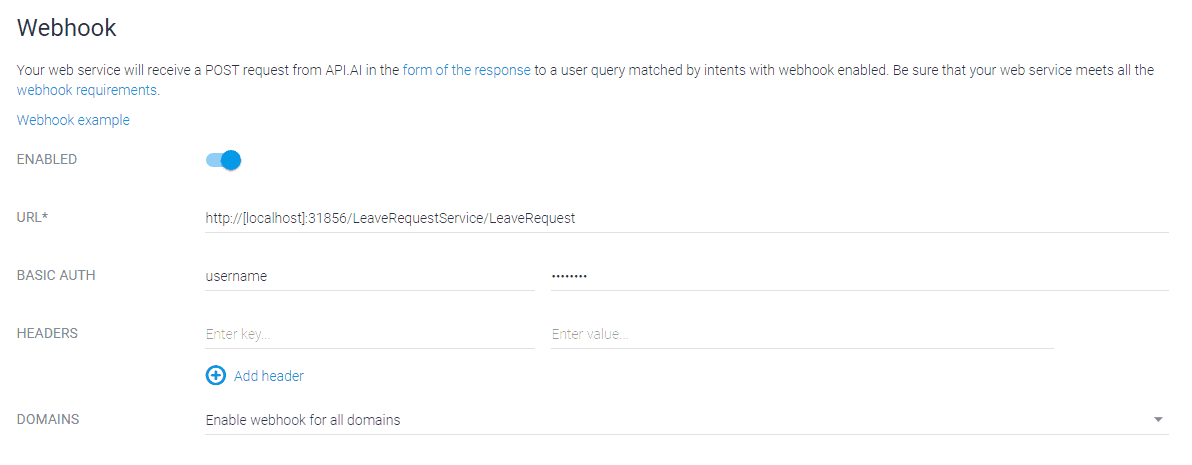

So, now that we have an Agent, an Intent and our own custom Entity set up, how can we return a response from our internal service? For this we can enable Webhook:

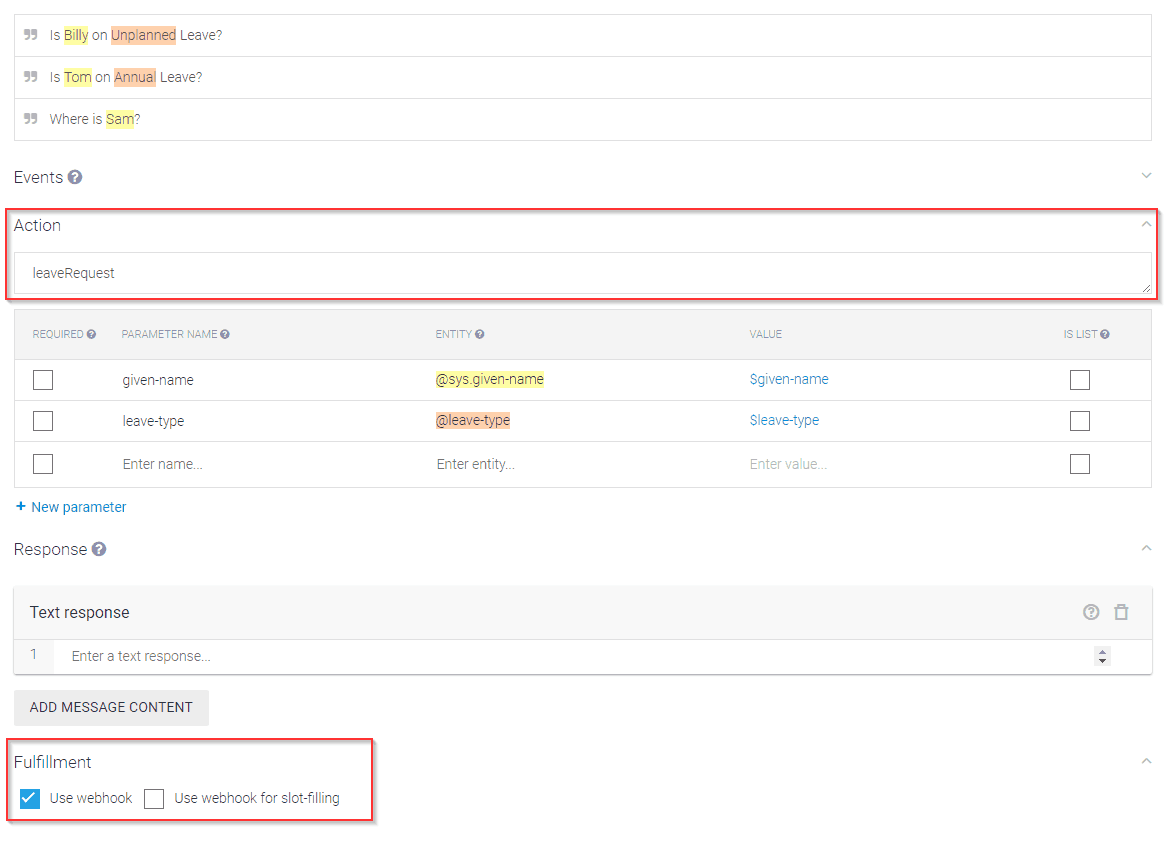

By using Webhook we can attach a web service URL for API.AI to post to and get a JSON result back from. So if we call the service and pass over the user’s name, we can find out their leave status: if the service made a call to the database to find out where a person was, it could return this value in JSON as a “fulfilment”. By checking the “Use webhook” checkbox and adding an action to the intent (this should match up with the method name), we can enable this for our intent:

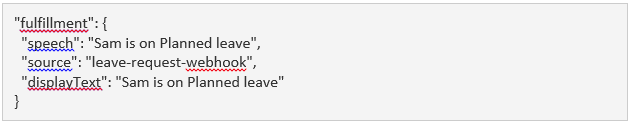

Now, when a request is sent to the Webhook-enabled service, it will return a JSON response that looks a little something like this:

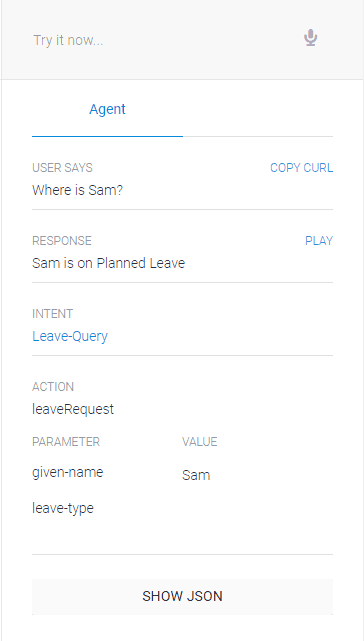
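For anyone who wants to see the service side, here is a rough Python sketch of what the webhook logic could look like. It is an illustration rather than the code behind the prototype. The action name `getLeaveStatus`, the `LEAVE_RECORDS` dictionary (standing in for the database call) and the `handle_webhook` function are all made up for this example. The field names (`result`, `action`, `parameters`, `speech`, `displayText`, `source`) and the `given-name` parameter follow the API.AI webhook format as I understand it, so do check them against the current documentation.

```python
import json

# Hypothetical stand-in for the internal leave database.
LEAVE_RECORDS = {
    "john": "Unplanned",
    "sarah": "Planned",
}


def handle_webhook(body):
    """Build a fulfilment response from a parsed webhook request body."""
    result = body.get("result", {})
    action = result.get("action")
    params = result.get("parameters", {})

    if action != "getLeaveStatus":  # assumed action name set on the intent
        message = "Sorry, I can't help with that request."
    else:
        name = params.get("given-name", "")
        leave_type = LEAVE_RECORDS.get(name.lower())
        if not name:
            message = "Sorry, I couldn't tell who you were asking about."
        elif leave_type is None:
            message = f"{name} is not on leave."
        else:
            message = f"{name} is on {leave_type.lower()} leave."

    # "speech" is what gets spoken, "displayText" is what gets shown.
    return {
        "speech": message,
        "displayText": message,
        "source": "leave-request-webhook",
    }


# Example request, trimmed down to the fields this sketch reads.
request_body = json.loads(
    '{"result": {"action": "getLeaveStatus",'
    ' "parameters": {"given-name": "John"}}}'
)
print(json.dumps(handle_webhook(request_body), indent=2))
```

The function takes and returns plain dictionaries, so it can be dropped into whichever web framework hosts the URL that the Agent posts to.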

From here API.AI will interpret the message and send out either a text or voice response to the user, as shown in the image below:

And there it is: a really simple process that could save a few minutes of manual effort looking through an internal system to get such a small amount of information.

Obviously, there are some downsides to using voice recognition software in a business environment, the big one being privacy and security. There has been enough publicity around the big commercial voice recognition products such as Google Home and Alexa to show that they aren’t exactly secure, so a business would need its own internal, secure software from which to run these commands, preferably software that isn’t constantly listening to your every word. This would of course mean that you need some sort of switch to activate the software, which makes it seem a little less efficient than you’d like to imagine.

Another issue with voice recognition is that it can sometimes struggle to understand what the user is saying, especially if the user has a thick accent. This can make using voice recognition very frustrating.

There’s also the reality that not every possible request is covered by the intent content. This isn’t so much of a problem, as more user requests can be added, and these can be added automatically through the “machine learning” that can be enabled in API.AI… but this is a blog, not an API.AI tutorial. So, if you are interested in learning more about API.AI or just interested in giving it a go, head on down to https://api.ai/ to get started.

It’s important to remember that voice recognition software and assistants are constantly developing and improving. What I’ve shown you is just the very tip of the iceberg. Looking at the bigger picture, we may be a little past the tip of the iceberg with this technology, but it’s exciting to think about the possibilities for voice recognition assistants in the future… and equally unsettling.